Abstract

The rapid advancement of digital health innovation, including Artificial Intelligence (AI), is transforming healthcare. The growing role the European Union (EU) plays in regulating the use of AI in healthcare renders national laws insufficient to safeguard patients from unique AI-related risks. This underscores the urgent need for the recognition of a canon of patients’ rights in the scope of EU law. This paper proposes the blueprint for an EU Charter for Digital Patients’ Rights, consolidating and adapting existing rights for patients to address these specific challenges. Traditional patients’ rights such as the right to privacy and the right to informed consent are interpreted in light of the challenges posed by AI. It also proposes novel rights for patients, such as the right not to be subject to automated medical decision-making and the right to meaningful human contact. This paper highlights the EU’s central role in protecting patients’ rights and outlines strategies for effective implementation. This comprehensive approach aims to address the current shortcomings and enhance the legal framework governing digital health in Europe.

Hannah van Kolfschooten

Cite as: van Kolfschooten, H. Towards an EU Charter of Digital Patients’ Rights in the Age of Artificial Intelligence. Digital Society 4, 6 (2025). https://doi.org/10.1007/s44206-025-00159-w

1 Introduction

Digital health innovation is thriving. Artificial Intelligence (AI) systems are increasingly integrated into healthcare, for instance, in diagnostic tools for skin cancer (Krakowski et al., 2024), models predicting the progression of Alzheimer’s disease (Yi et al., 2023), models predicting patients’ response to specific antidepressants (Poirot et al., 2024), and generative AI systems documenting notes on patient visits (Blease et al., 2024). Healthcare is currently one of the top areas for AI in the European Union (EU), as AI is expected to benefit diagnostics, treatment and care.Footnote1 This rapid growth underscores the urgency of addressing the rights of patients subjected to AI in healthcare, as new technology may create novel (and unforeseen) challenges.

Indeed, experts are concerned about the risks AI poses to the rights of patients (van Kolfschooten, 2022a). While this paper primarily examines the challenges posed by AI in healthcare, it situates these within the broader context of digital health innovation, including technologies like telemedicine and other digital advancements that shape patients’ rights. Digitalisation in healthcare encompasses a spectrum of innovations that collectively present opportunities and challenges for protecting patients’ rights. For example, studies show that AI systems are more likely to produce incorrect predictions for members of marginalised population groups because their data is often underrepresented in the underlying datasets (Lau, 2023; Medrano et al., 2023). Moreover, in cases where medical AI systems cause such adverse health outcomes (Duffourc & Gerke, 2023) or rights violations,Footnote2 it is not always clear to patients how to protect themselves. This may be exacerbated by the lack of uniform legislation on the protection of the rights of patients in Europe. This paper examines whether the EU is in need of a consolidated legal framework on patients’ rights to address these specific challenges.

While the importance of the rights of patients is generally recognised in all EU Member States and reflected in (inter)national legislation and guidelines, a uniform legal framework at the level of the EU is currently absent (Palm et al., 2020). The patients’ rights framework applicable to EU Member States consists of a patchwork of national legislation (Townend et al., 2018), various international human rights instruments and related jurisprudence,Footnote3 and EU secondary laws on health-related topics.Footnote4 Specifically, when AI is deployed for healthcare, the individual rights that patients are entitled to in the course of their medical treatment are scattered across these frameworks, causing uncertainty about their applicability, scope, and enforceability (Mira et al., 2012). Moreover, this patchwork of patients’ rights creates divergent levels of protection between European patients, which could amplify health inequity within the EU (Toebes, 2021). To address this fragmentation, this paper proposes consolidation through the adoption of a special legal instrument for patients’ rights in the scope of EU law.

More specifically, the growing integration of AI in healthcare may necessitate the adaptation of existing rights of patients, or the creation of new rights. Against this background, this paper outlines the blueprint for an ‘EU Charter for Digital Patients’ Rights’ in response to the challenges posed by the age of AI. Apart from the fragmented nature of the regulatory framework, this Charter is needed because current patients’ rights, as protected in national healthcare settings, are not necessarily adapted to the new algorithmic reality of healthcare. To illustrate, the few existing ‘soft law’ instruments on patients’ rights in Europe, such as the WHO Declaration on the Promotion of the Rights of the Patient in Europe (1994), are more than twenty years old and are thus not concerned with AI-specific risks.Footnote5 Besides, neither the newly adopted EU AI Act nor the Council of Europe’s AI Convention explicitly addresses the healthcare-specific risks AI poses to patients. The main reason for this is that both instruments apply across all sectors. The EU AI Act focuses on the safety and quality of the product, primarily stipulating rules for developers instead of rights for individuals.Footnote6 The AI Convention does introduce individual rights, but (for now) only applies to the public sector, thus generally excluding healthcare in most EU Member States (van Kolfschooten & Shachar, 2023).Footnote7

The importance of strengthening the rights and interests of patients in relation to new technology is recognised more broadly and has been increasingly pointed out by international organisations. In July 2023, the UN Special Rapporteur on the right to health highlighted the broader challenges of digital innovation, including AI, telemedicine, and electronic health record systems, which collectively influence patients’ rights and access to care. These considerations underline the importance of addressing not only AI but the broader spectrum of digital health technologies in patient rights frameworks (Human Rights Council, 2023). In October 2023, the World Health Organization (WHO) released a report outlining the key considerations for regulation of AI in healthcare, flagging its threats to informed medical decision-making by patients, personal data protection, and discrimination (WHO, 2023). In January 2024, the OECD published a report titled “AI in Health: Huge Potentials, Huge Risks”, underlining the need to address key risks of AI in health, such as unclear accountability, biased and opaque algorithms, and leaks of sensitive personal data (OECD, 2024). In the same vein, in March 2024, the Lancet Commission on Health and Human Rights published a critical report, calling for an adaptation of human rights to the 21st century in response to serious negative effects on health resulting from political, social, and technological developments and shortcomings in current frameworks (Beyrer et al., 2024). This criticism mirrors the broader trend of the ‘digital rights movement’.Footnote8

An EU Charter for Digital Patients’ Rights could ensure uniform protection against the unique risks AI presents in healthcare. In this light, this paper proposes to update ‘traditional’ patients’ rights and evaluates the inclusion of five new iterations of rights adapted to the specific context of healthcare and digital developments. At the same time, the paper acknowledges that establishing a new charter alone does not ensure effective implementation in medical practice, and it therefore discusses potential strategies to overcome this hurdle.

The paper is structured as follows. Section 2 examines the need for patients’ rights protection by assessing the implications of AI. Section 3 explores whether the algorithmisation of healthcare necessitates the adaptation of patients’ rights. In this light, it draws inspiration from the ‘digital rights movement’ for the establishment of potential new rights. Section 4 then examines whether the EU is in the best position to adopt legislation to protect the rights of patients. Section 5 puts forward the blueprint for an EU Charter for Digital Patients’ Rights. Section 6 concludes.

2 How AI Challenges Health and Patients’ Rights

2.1 Health Risks of Technical and Contextual Biases in AI

While healthcare is often presented as the most promising use case for AI, the increasing use of disruptive technologies such as AI in healthcare also presents significant challenges to the protection of the health and rights of patients in Europe. For example, when AI tools assist health professionals in diagnostics, health risks can materialise due to the inherent characteristics of AI.Footnote9 A primary concern is the tendency of AI systems to exhibit technical biases that are often ‘built into’ the system. This issue arises, for instance, when training datasets underrepresent certain population groups, such as women, black people, or patients with rare diseases, which can cause the system to miss the correct diagnosis for individuals in these groups. Inaccuracies in dataset labelling (e.g. mislabelling benign tumours as malignant) can also misguide the AI’s training and result in erroneous diagnoses (Logan et al., 2023). Furthermore, the model itself can be biased: for example, an AI tool to diagnose cardiac arrest in emergency calls could be programmed to give more weight to chest pain than to shortness of breath (Blomberg et al., 2019). As chest pain is typically experienced by men suffering from cardiac arrest, and shortness of breath by women, the AI tool is more likely to correctly diagnose the male caller (Greenwood et al., 2018). Recent reports submitted to the AI Incident DatabaseFootnote10 related to technical biases include (1) the WHO’s AI chatbot ‘S.A.R.A.H.’ (described as a ‘virtual health worker’Footnote11), which often provided incorrect answers, especially on the topic of mental health; (2) an analysis of AI models to predict the prognosis or course of infection in patients with Covid-19, which showed that the majority produced inaccurate predictions; and (3) an AI chatbot designed to provide mental health advice to children, which responded inappropriately to their reports of child abuse (Khawaja & Bélisle-Pipon, 2023; Wynants et al., 2020). All of these examples pose significant risks to the health of patients.
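The weighting example above can be made concrete with a small sketch. Everything in it is hypothetical and chosen only for illustration: the symptom weights, the decision threshold, the group labels, and the ten synthetic call records are assumptions, not values taken from Blomberg et al. (2019) or any deployed system. The sketch computes, per group, the share of true cardiac events that a simple weighted rule flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Call:
    group: str             # hypothetical demographic label
    chest_pain: bool       # symptom reported by the caller
    short_of_breath: bool  # symptom reported by the caller
    cardiac_event: bool    # ground truth established afterwards


# Hypothetical weights: chest pain counts far more than shortness of breath.
WEIGHTS = {"chest_pain": 0.8, "short_of_breath": 0.3}
THRESHOLD = 0.5  # hypothetical cut-off above which a call is flagged


def flag(call: Call) -> bool:
    """Return True if the weighted symptom score reaches the threshold."""
    score = (WEIGHTS["chest_pain"] * call.chest_pain
             + WEIGHTS["short_of_breath"] * call.short_of_breath)
    return score >= THRESHOLD


def sensitivity_by_group(calls: list[Call]) -> dict[str, float]:
    """Share of true cardiac events that the rule flags, per group."""
    hits: dict[str, int] = {}
    totals: dict[str, int] = {}
    for call in calls:
        if not call.cardiac_event:
            continue  # sensitivity only looks at true events
        totals[call.group] = totals.get(call.group, 0) + 1
        hits[call.group] = hits.get(call.group, 0) + int(flag(call))
    return {group: hits[group] / totals[group] for group in totals}


# Synthetic records: men mostly report chest pain, women mostly
# report shortness of breath, mirroring the pattern described above.
CALLS = [
    Call("men", True, False, True),
    Call("men", True, True, True),
    Call("men", True, False, True),
    Call("men", False, True, True),
    Call("women", False, True, True),
    Call("women", False, True, True),
    Call("women", True, True, True),
    Call("women", False, True, True),
    Call("men", False, False, False),
    Call("women", False, False, False),
]

rates = sensitivity_by_group(CALLS)
print(rates)
```

On these synthetic records the rule detects three of the four events among men but only one of the four among women, although the rule never refers to the group label. Reporting such per-group rates, rather than a single overall accuracy figure, is one way a technical bias of this kind can be made visible.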

Second, AI systems may exhibit contextual biases. This can happen when, for example, the AI system is deployed in a significantly different clinical setting or used for a different patient population than the one it was originally trained on (Nakagawa et al., 2023). This can also occur when the design of the AI system excludes certain patients from using it effectively, for example, if it requires the newest smartphone (Shaw, 2023). External factors can create further risks to patients’ health status, for instance, when the radiologist over-relies on the AI tool’s outcome and does not consider other relevant patient information, or does not have enough training and knowledge to adequately interpret the results provided by the AI system (Evans & Snead, 2024). In other words, AI systems can generate biases in all phases of the AI lifecycle. This reinforcing loop can be described as the ‘AI cycle of health inequity’: existing discriminatory practices are programmed into AI systems that replicate these biases in their output, eventually resulting in health inequity, that is, avoidable and therefore unfair systematic differences in the health status of different population groups.Footnote12

2.2 Adverse Effects of AI for Patients’ Rights Protection

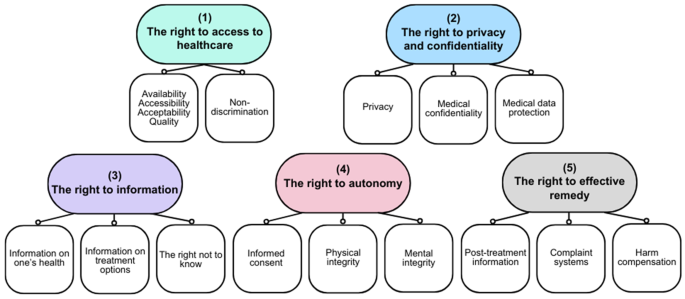

In turn, the health risks described above can infringe on the rights of the patients involved (Cathaoir, 2022). Patients’ rights in EU Member States are protected through a fragmented, multi-layered system. While each EU Member State has its unique set of laws and policies that define and protect patients’ rights, the national patients’ rights frameworks are in large part made up of supranational frameworks, such as health-themed EU Directives,Footnote13 the legislation of the Council of Europe, of which all EU Member States are members,Footnote14 international treaties such as the International Health Regulations,Footnote15 and ‘soft law’ instruments such as professional guidelines. The exact interpretation of rights varies from one Member State to another, reflecting differences in legal systems, healthcare organisation, and cultural values. Because of these shared international norms and the common roots in bioethical principles, it is nevertheless possible to deduce a set of ‘core’ patients’ rights that are recognised in all EU Member States,Footnote16 clustered in five categories. As shown in Fig. 1, all EU Member States protect rights in the following categories: (1) the right to access to healthcare; (2) the right to privacy and confidentiality; (3) the right to information; (4) the right to autonomy; and (5) the right to effective remedy.
The identification of core patients’ rights is based on a combined reading of the various—non-legally binding—patients’ rights frameworks that have been published over the years: the rights enshrined in the WMA Declaration of Lisbon on the Rights of the Patient (2015)Footnote17, the Active Citizenship Network’s European Charter of Patients’ Rights (2002),Footnote18 and the WHO Europe Declaration on the Promotion of the Rights of Patients in Europe (1994).Footnote19 The scope and interpretation are determined by general human rights instruments and related case lawFootnote20 and health-specific supranational legislation.Footnote21

First, although AI can also improve access due to its capacity to speed up medical decisions and enable remote care, the right to access to healthcare is potentially infringed when AI systems, influenced by technical biases, underrepresent certain demographic groups. For example, an AI diagnostic tool biased against Hispanic and Asian women may lead to underdiagnosis, directly limiting these patients’ access to necessary treatments and healthcare services (Celeste et al., 2023). This also constitutes a clear violation of the right to non-discrimination, as AI may favour certain patient populations over others, creating systematic barriers to healthcare access for marginalised groups (Takshi, 2020). The opaque functioning of AI makes it difficult to identify and correct biases. Indeed, AI exhibits new forms of discrimination not directly protected by law, such as ‘proxy discrimination’: differentiating on the basis of seemingly neutral categories (e.g. postal codes) that nonetheless disproportionately harm members of a protected class (Prince & Schwarcz, 2020; Schwarcz, 2021). For example, in the CHEZ case (Case C-83/14 at para. 56), where an electricity distributor had placed electricity meters at an inaccessible height in a district commonly known for its Roma population, the Court of Justice of the EU (CJEU) referred to this practice as ‘discrimination by association’. In the same vein, contextual biases can limit access to healthcare for certain individuals, for example when the design of the AI system poses hurdles due to limited digital skills or restricted financial resources (Ahmed et al., n.d.; Finco et al., 2024).

Second, the right to privacy and confidentiality could be compromised by AI systems, due to their processing and handling of vast amounts of personal health data. By monitoring and tracking various bodily functions and physical activities, potentially combined with data from external sources, AI systems can create detailed and intimate profiles about an individual’s health. These profiles can be used to make elaborate predictions about a person’s health status and development. It is questionable to what extent patients can exercise control over who can access their data, also because applicable data protection regimes such as the EU General Data Protection Regulation (GDPR) do not always require the patient’s consent for processing. In any case, informed consent as a mechanism to protect the confidentiality of health data is not always effective in practice, as empirical research reveals that people often click consent without reading the conditions (van Kolfschooten, 2022b). Moreover, the increasing complexity of AI systems and data flows compromises the capacity of individuals to understand what they consent to. Anonymisation of personal data, another solution frequently proposed to protect privacy, is not always technically possible for health data (Ghazarian et al., 2021). In practice, effective data protection primarily depends on adequate enforcement, which is often missing for the GDPR (Daigle & Khan, 2020).

Third, the right to information is affected when AI systems function as ‘black boxes’: it is not clear how exactly the system arrived at a certain decision for a specific patient. This lack of transparency can impair patients’ understanding of their health status or the available medical interventions. When the healthcare professional cannot provide the patients with the relevant information, this may affect the level of trust in the patient-health professional relationship (Kim & Routledge, 2022). Moreover, when medical AI exhibits biases, it could provide irrelevant, inaccurate, or incomplete health information to certain patients. Finally, through its ability to find new patterns in large datasets, AI could also generate new insights into the health status of individuals—potentially violating their right not to know (Davies, 2020).

Fourth, while AI could potentially improve autonomy because of its capacity to personalise healthcare, the increasing use of AI may also jeopardise the right to autonomy. The right to informed consent does not only play a role in relation to the processing of personal data but is also an essential part of the right to autonomy. Patients need to understand the implications of their medical decisions to provide informed consent to medical treatment. When neither the healthcare professional nor the patients can explain or understand how an AI-generated diagnosis was made, for example, due to the ‘black-box’ nature of the AI or limited digital skills, this may affect the level of trust in the patient-health professional relationship, which may influence the patient’s decision to seek care (Durán & Jongsma, 2021). Biases in AI systems, such as AI diagnostic tools, can compromise patient autonomy by providing inaccurate or incomplete information that influences the patient’s medical decisions. For example, a misdiagnosis due to biased AI may result in the patient’s choice of treatments that may not be necessary or effective, thereby violating their physical and mental integrity (Agarwal et al., 2022; Lau, n.d.). Indeed, it is doubtful whether patients—and health professionals alike—can even refuse the use of AI systems while maintaining access to high-quality healthcare (Ploug & Holm, 2020a, b).

Finally, the emerging use of AI in healthcare may infringe on the right to effective remedy. If AI systems have caused harm, either in the form of physical harm or violation of human rights, this is difficult for the affected patient to prove. In the case of physical harm, proving causality can be problematic, while for rights violations, patients do not always have (1) knowledge about the violation (e.g. in the case of illegitimate data sharing with third parties) or (2) access to the data that is necessary to prove the violation (e.g. the training dataset showing how information related to their population group was not included). The opacity of AI makes it hard to identify and prove breaches (Duffourc & Gerke, 2023).

In conclusion, the increasing use of AI technologies in healthcare may undermine the protection of the health and rights of patients in Europe. At the same time, it can be concluded from the above that the codification of existing rights may not suffice to protect against the new challenges posed by AI systems, as precisely these rights are threatened by the increasing integration of AI technologies in healthcare settings. Similar challenges arise with other digital health technologies, such as telemedicine, which can exacerbate inequities due to disparities in digital access and literacy. These issues highlight the need for a comprehensive approach that addresses the interconnected challenges of AI and broader digital innovations in healthcare. The next Section explores whether the algorithmisation of healthcare necessitates the adaptation of patients’ rights—or the adoption of new patients’ rights—and what this could look like.

3 Adapting Patients’ Rights to the Age of AI

3.1 Responding to Political, Social, and Technological Transformations

The human rights community has been increasingly calling for a renewal of ‘the paradigm of human rights and health’, responding to major technological, political, and social transformations. In March 2024, the Lancet Commission on Health and Human Rights published a critical report, calling for an adaptation of the fundamental principles of human rights to the 21st century, referring to numerous shortcomings in current frameworks (Beyrer et al., 2024). First, from a political perspective, human rights enforcement has focused primarily on the relationship between sovereign states and their citizens, whereas health threats increasingly transcend borders (Weber, 2022). Additionally, the power of private actors is growing, while their actions are not always covered by human rights law. This accountability gap allows private entities, such as multinational corporations (e.g. Google and Amazon) and private healthcare providers, to influence the health sector, for example by introducing disruptive health technologies, without corresponding obligations to uphold patients’ rights (Hermes et al., 2020). At the same time, efforts to place human rights at the centre of health should not focus solely on the healthcare sector, because health systems are ultimately shaped by political decisions at the state level. It is therefore essential to extend the scope of patients’ rights beyond the traditional patient-health professional relationship and include both public and private actors.

Furthermore, from a social perspective, in response to the wider emergence of feminist legal theory in human rights law scholarship, more recent scholarship calls for an intersectional approach to health and human rights. Instead of studying the social determinants of health in ‘silos’ (e.g. the effects of race on health outcomes or the effects of gender on health outcomes), it is argued that health is determined by multi-factor and multi-level intersecting individual characteristics (Kapilashrami & Aziz, 2023). Different combinations of individual characteristics can expose certain individuals or population groups to distinct forms of discrimination. A narrow interpretation of discrimination neglects the existence of multiple intersecting systems of oppression (Crenshaw, 1989). This understanding is especially important in the context of health law and policy research, as insight into the differential impact of health policy on often highly heterogeneous population groups is necessary to develop appropriate health responses without producing unfair health outcomes (Hefti, 2024; Hefti et al., 2024). To illustrate, treating a black woman only as a “woman” or only as “black” ignores the varied health disparities experienced by people with both characteristics (Crenshaw, 1989). For example, a study in Germany showed that women with low income who immigrated are more likely to experience severe somatic symptoms (Barbek et al., 2024). A new patients’ rights charter should thus advocate an intersectional approach.

Finally, from a technological perspective, rapid technological developments call for a novel approach to the protection of human rights in relation to health. As explained in Sect. 2.2, current patients’ rights are undermined by the increasing use of AI, for instance when AI exhibits new forms of discrimination not directly protected by law, and when contextual biases limit access to healthcare for certain individuals, for example, due to limited digital skills or restricted financial resources. The Lancet Commission on Health and Human Rights has also raised concerns about the implications of the emerging use of AI for human rights and health, such as the implications of the capacity of algorithms to deanonymise supposedly anonymous health data; the misuse of biometric data by governments; and increasing discrimination caused by biases affecting health outcomes of marginalised groups (Beyrer et al., 2024). They conclude that “governments should ensure that existing privacy legislation, regulation, and impact monitoring keeps pace with advances in artificial intelligence”, thereby underlining “the need for these measures to be underpinned by a strong human rights framework” (Beyrer et al., 2024).

In other words, recent societal developments—including the integration of AI in healthcare—necessitate the enhancement of existing patients’ rights to ensure they remain effective—or the adoption of new patients’ rights (von der Decken & Koch, 2020).

3.2 The Quest for Novel Human Rights

The call for the codification of patients’ rights in response to major technological, political, and social transformations should be placed within the context of the broader quest for ‘novel’ human rights. The classic human rights instruments, such as the European Convention on Human Rights (ECHR), date back to the 1950s and thus did not incorporate issues related to the digital society. Over the past few decades, new human rights have emerged to address evolving social, political, and technological challenges. While some human rights have developed in the context of the ‘living instrument doctrine’ (the approach taken by the European Court of Human Rights (ECtHR) to the evolutive interpretation of the rights enshrined in the ECHR in light of present-day circumstances),Footnote22 other human rights have been codified as ‘new’ human rights.Footnote23 Worldwide, human rights activists, non-governmental organisations, and scholars have advocated for legal recognition of specific new human rights (von der Decken & Koch, 2020), for example in relation to rights of “vulnerable” groups such as women’s rights,Footnote24 and children’s rights.Footnote25

In the 2000s, the ‘digital rights movement’ emerged in response to the development of new types of technologies (Murphy, 2009), for example in the field of gene editing and internet access (Bertolaso & Marcos, 2022; Pollicino, 2022). In recent years, there has been a surge in proposals for AI-related rights. Advocacy statements, such as the 2017 proposal by ARTICLE 19 for a ‘Universal Declaration of Digital Rights,’ emphasise the urgent need for a cohesive global approach to digital and AI-related human rights (#InternetOfRights, 2017). Instruments such as the European Declaration on Digital Rights and Principles (European Commission) and the AI Convention on Human Rights (Council of Europe) provide foundational frameworks for these emerging rights. Most recently, there has been a call for the adoption of ‘neurorights’ (Bublitz, 2022; Ienca & Andorno, 2017; Nawrot et al., 2024), that is, new human rights specific to the challenges posed by neurotechnology, defined as “the field of devices and procedures used to access, monitor, investigate, assess, manipulate, and/or emulate the structure and function of the neural systems of animals or human beings” (UNESCO, 2023). In response, UNESCO is currently drafting an international instrument on the protection of neurorights (UNESCO, 2024).

However, none of these instruments is specific to the context of healthcare, and thus none provides direct protection for the rights of patients. While the core patients’ rights are generally protected under human rights law, it is important to articulate specific rights for patients and thereby make the harm occurring in this context more visible. In this way, States’ responsibilities towards protection are promoted, and national courts and human rights bodies can apply the rights of patients to specific contexts. As concluded in Sect. 2.2, current patients’ rights do not specifically protect against the risks arising from the increasing use of AI. This seems to leave a gap in the protection of individuals in the healthcare sector (van Kolfschooten, 2022a). In this light, it may be necessary to add new patients’ rights to modernise the current catalogue of European patients’ rights.

3.3 Time for New Patients’ Rights?

As explained in Sect. 2.2 above, it is possible to deduce fourteen ‘core’ patients’ rights clustered in five categories across all EU Member States from various supranational instruments, human rights legislation and accompanying case law, and ‘soft law’ instruments such as professional guidelines. However, as shown before, these rights do not necessarily protect patients from the new challenges arising from AI systems, at least in their traditional interpretation. Upon analysing the key scholarship on new digital rights (Custers, 2022; Hertz, 2022; Ienca & Andorno, 2017; Nawrot et al., 2024; von Arnauld et al., 2020), five rights were identified that have been previously proposed in the literature in relation to other areas and that could qualify as new patients’ rights:Footnote26 (1) the right not to be subject to automated medical decision-making; (2) the right to meaningful human contact; (3) the right to mental privacy; (4) the right to digital health literacy; and (5) the right to access new healthcare technologies. These rights respond to common threats, are based on important social values, and are capable of achieving consensus in the EU (Alston, 1984).Footnote27 While some of these rights should be considered individual rights that can be exercised only individually, others should be seen as collective rights. For example, given the collective interest it serves, the right to digital health literacy is a collective entitlement of all members of the patient community. This means that a violation affects all members of the group. The right to access new health technologies has both an individual component (access to technology X in the treatment of patient Y) and a collective component (e.g. inclusion of technology Z in the basic health insurance of all patients). The same could apply to the right to mental privacy, because apart from individual privacy harms, the large training datasets for AI allow for profiling of groups of patients sharing similar characteristics.Footnote28

3.3.1 The Right Not to Be Subject to Automated Medical Decision-Making

Ethicists have argued that a right not to be subject to automated medical decision-making could contribute to the protection of health privacy as protected in Article 7 of the EU Charter of Fundamental Rights (CFR) (Ploug & Holm, 2020a, b). Such a right entails that, under certain circumstances, patients should have the right to refuse medical procedures based on decisions taken with the aid of assisting AI (e.g. diagnostics or treatment selection) and to refuse the use of partially and fully automated decision-making (e.g. AI cardiac monitoring or precision medicines) as part of their individual medical treatment, while retaining access to a non- or less-automated alternative treatment (Ploug & Holm, 2020a, b). The patients’ right not to be subject to automated medical decision-making ensures human involvement in medical care. As AI becomes more integrated into healthcare, decisions made by algorithms can lack the nuance and empathy that human professionals provide (Montemayor et al., 2022). This right protects patients from potential errors, biases, and dehumanisation associated with AI systems. It emphasises the need for human judgment in critical health decisions, safeguarding patient autonomy, personalised care, and the ethical use of AI in medicine. Such a right is currently absent in the EU patients’ rights framework, although a health-conformant reading of Article 22 GDPR could provide patients with an enforceable right in this respect (van Kolfschooten, 2024b).

3.3.2 The Right to Meaningful Human Contact

The Rathenau Institute also suggested the adoption of the right to meaningful human contact in relation to the notion of human dignity, the basis of the human rights enshrined in the CFR. It encompasses the need for genuine interpersonal interactions, which is crucial for emotional and social well-being (Rathenau Instituut, 2017). This could be relevant to include as a patients’ right in the Charter. The right to meaningful human contact is frequently brought up in debates about using social or care robots in assisted living and elderly care situations (Laitinen et al., 2016), solitary confinement in prisons (Naylor & Shalev, 2022), and isolation of patients in psychiatric hospitals (Brown et al., 2020). Human contact is one of the fundamental aspects of healthcare. There is a widespread fear that the growing use of (AI) technology will eventually replace human contact and increase loneliness among vulnerable groups (European Parliament, 2017). As AI is becoming more common in healthcare (and may perhaps reach the extent of full automation in some areas), recognising this right as a patients’ right is increasingly important. It could be recognised as part of the right to respect for private life in Article 7 CFR and Article 8 ECHR.

3.3.3 The Right to Mental Privacy

Scholars in the field of ‘neurorights’ have been advocating a right to mental privacy as an extension of Articles 7 and 8 CFR: a right of individuals to control their own neural or brain data (Andorno & Lavazza, 2023; Ienca & Andorno, 2017; Ligthart et al., 2022). In the context of healthcare, brain data has been used for a long time for diagnostic purposes (for example with EEG systems). Recent developments in technology allow for increasing collection, processing, and use of brain data (potentially ‘mind reading’) and direct interaction with neural processes (potentially ‘brain alteration’), which could lead to intrusion into the personal sphere, potentially compromising the freedom of thought (Shen, 2013). While there is thorough legal protection for other types of information about the body, brain information as such is currently not considered personal data, thus excluding it from the sphere of privacy and data protection rights (Ienca & Andorno, 2017). Including a patients’ right to mental privacy in healthcare is critical for protecting patients’ thoughts, emotions, and cognitive processes from intrusive technologies. As AI becomes capable of analysing mental states, there is a risk of unauthorised access to intimate mental data, potentially leading to misuse, discrimination, or manipulation. Safeguarding mental privacy as part of the rights to respect for private life and personal data protection ensures that patients retain control over their cognitive space, fostering trust in healthcare systems and preventing psychological harm (López-Silva et al., 2024).

3.3.4 The Right to Digital Health Literacy

The right to digital health literacy ensures that individuals have the knowledge and skills to understand explanations of how digital health technologies, including AI, function and instructions on how to engage with them. This right is crucial as healthcare increasingly relies on digital tools for diagnosis, treatment, and management. Patients need to comprehend how these technologies work, their benefits, and potential risks to make informed decisions about their health. Promoting digital health literacy fosters trust, enhances patient autonomy, and ensures equitable access to healthcare, preventing disparities in the use of advanced medical technologies. It has been described as a “super social determinant of health” (Kickbusch et al., 2021). The AI transformation of the healthcare sector makes it urgent for patients to develop a high level of AI health literacy. AI health literacy is of paramount importance to establish patients’ trust in healthcare professionals and the healthcare system when AI medical decision-making becomes the standard (Tsai et al., 2018). AI health literacy must not be seen as the responsibility of the patient, but rather be regarded as a key enabler of patients’ rights protection, therefore demanding adequate safeguarding in the EU legal order. The other side of the coin is that healthcare professionals should be obliged to have a sufficient level of AI literacy when deploying AI systems.Footnote29 The patients’ right to digital health literacy could be acknowledged in relation to the right to informed consent (Ali et al., 2024).

3.3.5 The Right to Access New Healthcare Technologies

In light of the emerging development of technology for medical treatment, including AI systems, the right to access to healthcare could encompass a patients’ right to access new healthcare technologies. Generally, the right to access to healthcare is not interpreted as a right to a specific treatment, but rather as an obligation to ensure access to essential medicines and equitable distribution of health goods.Footnote30 Patients have a right to access to healthcare which is available to the population generally.Footnote31 Moreover, States do have an obligation to the progressive realisation of the right to health, meaning they need to invest in improving healthcare (Ó Cathaoir, 2022).Footnote32 While the adoption of a right to access new healthcare technologies would not include a right to access a specific technology tool (also because this would be dependent on financial resources; Calcoen et al., n.d.), it does entitle patients to the enjoyment of the benefits of the healthcare technologies that are generally available for the wider population. In this sense, it is comparable to Article 15 of the International Covenant on Economic, Social and Cultural Rights (ICESCR), which protects “the right of everyone to enjoy the benefits of scientific progress and its applications” (Ó Cathaoir, 2022).

4 Protecting Patients’ Rights at the EU Level

4.1 Limited Competencies, Yet Significant Impact on Healthcare

While the EU holds a key position in the regulation of the internal market, therefore facilitating the availability of medical AI technologies in the EU market, it does not provide specific rights for the patients subjected to AI. Indeed, a consolidated EU framework for the protection of the rights of patients does not yet exist. Patients’ rights protection in EU Member States is characterised by a patchwork of laws in a multi-level legal framework. The codification of patients’ rights is not merely symbolic but a practical tool for improving healthcare systems, protecting human dignity, and ensuring equitable treatment of patients because of its role in education and awareness, its impact on national policies, its advantages for identifying violations, and its contribution to patient empowerment (Cohen & Ezer, 2013).

It is often argued that the EU’s possibilities to make legislation in the area of healthcare are limited, as the organisation and delivery of health services and medical care is the primary responsibility of the Member States, and harmonisation is constitutionally restricted (Greer et al., 2022). In light of the principles of conferral and subsidiarity, the EU’s power in healthcare is limited to taking supporting measures (Greer et al., 2022; Sauter & Guy, 2017). The Treaty on the Functioning of the European Union (TFEU) does confer some explicit competencies in the realm of health upon the EU in Article 168, such as measures setting high standards of quality and safety for medicinal products and devices for medical use. However, because of its competencies concerning the regulation of the internal market, the body of EU health law and policy deriving from internal market legislation is growing (Purnhagen et al., 2020). The principle of free movement of goods allows for health products and services to freely circulate in the EU. With European cooperation in cross-border healthcare growing, concomitant EU rules on health products and services, intended to promote and protect the internal market, are increasing (Abbing, 2010). In the Tobacco Advertising case, the Court of Justice of the EU (CJEU) ruled that, while the EU’s harmonising measures may have the side effect of protecting human health, the EU is not allowed to adopt regulation on the basis of other Treaty articles with the sole objective of health protection. Therefore, in practice, EU health law is often a by-product of internal market regulation on the basis of Article 114 TFEU (de Ruijter, 2016). This development shows that EU health law and policy are mainly grounded in economic considerations and do not specifically consider health or patients (Greer et al., 2022).

Indeed, many EU (health) laws and policies made on the basis of the internal market competence influence patients’ rights (Nys & Goffin, 2011). Through the regulation of goods, services, and people related to healthcare, such as AI systems,Footnote33 personal data,Footnote34 and medical devices and medicines,Footnote35 the EU already enters the realm of health law and patients’ rights. Thus, while the EU has limited direct power in shaping health policies in the Member States due to a lack of competencies, it does co-create effects for patients’ rights through broader legislation and policies, including the regulation of AI systems. By designing the conditions for AI systems to enter the EU market, the EU also determines the extent to which patients may encounter the potential harm to their health or rights posed by these AI systems (van Kolfschooten, 2022a; van Kolfschooten & Shachar, 2023). From this point of view, it could be argued that the EU should, by allowing these risks, also provide concomitant protection for patients’ rights within the scope of EU law. The EU’s responsibilities for the protection of human health and human rights may even demand such protection (de Witte, 2006).

4.2 EU Responsibilities for Health Mainstreaming and Protecting Human Rights

While all EU Member States do have protections in place for the rights of patients (Townend et al., 2018), at the EU level there is currently no corresponding instrument that provides uniform recognition of the rights of patients. In light of the expanding impact of the EU on healthcare, this constitutes a gap in protection for European patients when applying and interpreting EU law. First, one of the key objectives of the EU is to “promote the well-being of its peoples” (Article 3 TEU). This objective is accompanied by a so-called “health mainstreaming” obligation: “A high level of human health protection shall be ensured in the definition and implementation of all Union policies and activities” (Articles 9 and 168(1) TFEU; Article 35 CFR). When adopting legislation to harmonise the internal market, the European Commission needs to take as a base a high level of protection of human health (Article 114(3) TFEU). This means that, while the competency of the EU to adopt legislation directly determining national health policies is restricted, the EU does have to safeguard human health in its legislation applicable in other areas, including internal market legislation on health technology (Hervey & McHale, 2015). The EU’s commitment to health mainstreaming shows its awareness of the potential (undesired or unexpected) effects of its laws and policies on health. Technically, the provision on health mainstreaming could be relied on to invalidate EU legislation that does not provide a sufficiently high level of protection. This, however, seems difficult to enforce in practice (Bartlett, 2024).

Second, the EU has obligations toward the protection of human rights, including those related to healthcare. Respect for human rights is among the EU’s founding values (Article 2 TEU). To a certain extent, the intrinsic connection between health and human rights, as advocated in the ‘health and human rights’ scholarship (Gostin, 2020; Mann et al., 1999; McHale & Hervey, 2015; Murphy, 2013; Toebes et al., 2022), is recognised in the EU legal order (Herrmann et al., 2022). For instance, the importance of health in relation to human rights is also enshrined in Article 35 CFR, protecting the right to “access to preventive health care and the right to benefit from medical treatment under the conditions established by national laws and practices”. It is recognised in the CFR that EU law and policy that serve the interest of health protection may under certain circumstances limit other human rights. For example, the framework on anti-tobacco legislation and restrictions of the fundamental principles of the internal market shows how health-related law and policy (e.g. health warnings on tobacco packaging) can sometimes limit other rights and interests (e.g. free movement of goods) (Delhomme, 2017).

Moreover, in light of increasing regulatory complexity due to (1) the expansion of EU action in an increasing number of areas (indirectly) affecting national health laws and policies, (2) a growing number of international treaties governing health rights to which all EU Member States are parties,Footnote36 and (3) growing digitalization creating new questions and challenges for protecting patients’ rights (Legemaate, 2024), the EU is the most obvious choice for locating a new patients’ rights instrument. Exhaustive harmonisation of a specific policy area prevents Member States from adopting national measures deviating from EU legislation.Footnote37 The AI Act aims to harmonise the entire area of AI systems (“the placing on the market, the putting into service, and the use of AI systems”).Footnote38 This means that, generally, Member States cannot deny market access to products that meet the requirements of the AI Act or stipulate additional rules that may frustrate the internal market.Footnote39 While the AI Act does have the objective to protect human rights, especially with regard to ‘high-risk’ AI systems, these rules are insufficient to protect the rights of patients for multiple reasons. First, the instrument applies across all sectors, thereby disregarding the specific issues in healthcare. Second, it focuses on the safety and quality of the product rather than on rights for individuals (van Kolfschooten, 2022a). Finally, it contains many exceptions precisely for medical devices using AI (van Kolfschooten & van Oirschot, 2024). Thus, while the EU Member States have the primary responsibility for healthcare and health policy,Footnote40 due to the approach of maximum harmonisation to AI systems and other (health) technologies,Footnote41 the EU seems to be better positioned to adopt legislation to protect the rights of patients in relation to those measures.

4.3 Towards a Uniform EU Approach to Patients’ Rights Protection

The increasing use of AI in healthcare heightens the need for a unified legal approach to patients’ rights within the EU in the form of an EU Charter for Digital Patients’ Rights. The consolidation and modernisation of the catalogue of European patients’ rights in one EU legal instrument have multiple advantages. First, a codified framework would empower patients by clearly defining their rights, enabling them to advocate more effectively and seek legal redress when necessary. This is necessary to protect patients against the undesirable actions of health professionals when deploying AI in healthcare in an inherently asymmetric relationship. Codification ensures that both patients and healthcare professionals understand their rights and obligations, allowing for more consistent interpretation, potentially preventing disputes, and strengthening judicial oversight (London, 2008). Second, codifying patients’ rights can improve public health outcomes by ensuring that patients have consistent access to high-quality healthcare and that their rights are protected, thereby improving overall trust in the healthcare system (Bærøe et al., 2020). Third, it would align the interpretation of patients’ rights throughout EU Member States, ensuring equal rights and protections, and reducing disparities across the EU. This could lead to uniform enforcement mechanisms across the EU, providing more effective protection and redress for patients whose rights are violated. Finally, a codified set of patients’ rights simplifies the current complex and fragmented legal landscape when AI infringes on rights, providing a clear and consistent legal framework that is easier to navigate. This is warranted by the sensitive nature of the patient-health professional relationship and the patient’s position of vulnerability and dependency when in need of healthcare.

New rights could extend protection to individual patients and patient groups that were previously unrecognised under existing human rights frameworks, which allows for a better response to contemporary issues in healthcare. Legal recognition of patients’ rights provides a uniform, clear, and effective framework, leading to better implementation and accountability. An EU Charter for Digital Patients’ Rights is a logical step in light of political, social, and technological developments (Beyrer et al., 2024). Such an initiative would align with recent efforts to intensify the ‘European Health Union’ (creating more collaboration in the field of health in reaction to the Covid-19 pandemic) and with the wish to protect human rights in relation to AI (Alemanno, 2020). The next Section proposes the blueprint of such an instrument.

5 Proposal for an EU Charter of Digital Patients’ Rights

5.1 Reasons for and Objectives of the Proposal

Major technological, political, and social transformations of society affect the human rights of individuals in Europe. Generally, the intensifying cross-border character of healthcare due to increasing international pandemics, the integration of non-state private actors such as multinational technology companies, and the increasing online, non-territory bound character of healthcare necessitate additional EU action in this field. In particular, the increasing algorithmisation of healthcare, while introducing considerable opportunities to improve the accessibility, efficiency, and quality of healthcare, presents significant challenges to the rights of patients. The responsibilities the EU has towards the protection of human rights (Article 2 TEU), in combination with the ongoing expansion of EU policies directly influencing the rights of patients,Footnote42 call for stronger protection of patients’ rights in the scope of EU law.

Depending on the exact content of the Charter, the legal basis for this proposal could be Articles 114, 168, 16 and 19 TFEU. Article 114 TFEU concerns the internal market and allows for the adoption of measures necessary for the establishment or functioning of the internal market, whilst at the same time ensuring a high level of public health protection as required in Articles 114(3) and 168(1) TFEU, and fully respecting the responsibilities of the Member States to organise, finance and deliver health services and medical care. Article 16 TFEU allows for the adoption of rules for the protection of individuals with regard to the processing of personal data and Article 19 TFEU allows for measures to combat discrimination. The underlying idea is that, when taking measures to protect the internal market, the EU ought to protect the health and rights of patients too.

The proposal concerns the protection of the rights of patients and is an important building block of the European Health Union (European Commission, 2024). It sets out the rights and freedoms individuals are entitled to in the context of healthcare technologies. It aims to address the fragmented approach to the protection of the rights of patients in Europe, caused partially by the complex regulatory framework of EU secondary law related to healthcare and health-related products, and exacerbated by new technological, political, and social developments. The EU Charter of Digital Patients’ Rights responds to these developments. It departs from the understanding that patients’ rights have their basis in human rights and thus warrant thorough protection. It recognises that various individual characteristics can create varying discrimination for certain individuals or population groups, and therefore emphasises the need for an intersectional approach to the rights enshrined in this Charter.

Against this background, the objective of this proposal is twofold. First, it aims to clarify how EU human rights and values should be applied in the context of healthcare. Second, it translates these human rights and values to apply in the new digital reality. In doing so, it builds on the EU Charter of Fundamental Rights and the case law of the Court of Justice of the EU and the European Court of Human Rights (ECtHR). The ECtHR’s case law is relevant because of Article 52(3) CFR: the meaning and scope of the rights in the CFR are the same as the corresponding rights in the ECHR. Explicating the rights of patients strengthens protection by making those rights more visible in one Charter. Ultimately, it promotes the rights enshrined in the Charter in order to strengthen the position of patients and guide relevant stakeholders in the implementation (e.g. States; individual healthcare professionals; hospitals; and technology developers). The Charter sets out in a single text all the rights of patients receiving healthcare in the EU.

5.2 Proposed Scope of Application

The Charter protects the rights of European patients in relation to the areas of health that are subject to EU harmonisation, such as health technology. The rights and entitlements in the Charter shall apply to individuals receiving healthcare, or seeking to receive healthcare, in an EU Member State, regardless of how it is organised, delivered and financed. ‘Healthcare’ means “health services provided by health professionals to patients to assess, maintain or restore their state of health, including the prescription, dispensation and provision of medicinal products and medical devices”.Footnote43 Because many of the rights enshrined in the proposed Charter have their basis in the CFR, it is logical to follow the same scope of application. The Charter applies to the institutions, bodies, offices and agencies of the Union and to the Member States only when they are implementing Union law.Footnote44 Because of the expanding EU action in areas related to health and healthcare, such as (AI) medical devices, personal (health) data, and clinical trials, this scope is broad enough to cover the main challenges for patients’ rights.

While Article 168(7) TFEU prevents the EU from determining national health policies and laws, and EU patients’ rights could thus only be applied within the scope of the implementation of EU law, they could still have a de facto impact above and beyond EU law by influencing EU Member States to implement these rights in other areas.

5.3 Legal Status and Choice of the Instrument

Human rights scholars have recognised numerous pathways to the adoption of new human rights—in this case, patients’ rights. In the derivation approach, new human rights can be derived from existing rights through interpretation, for example through the living instrument doctrine by courts to address new situations that fall within the scope of existing rights. In the treaty approach, new human rights are established through the adoption of new treaties or optional protocols to existing treaties. Another pathway is the soft law approach, where new human rights are first mentioned in non-binding resolutions and declarations by international organisations. Over time, these soft law instruments can pave the way for formal recognition and the drafting of binding treaties (von der Decken & Koch, 2020).

The EU Charter of Fundamental Rights has the form of an EU Treaty, signed and ratified by all EU Member States. This proposal does not advocate for a treaty revision or adoption of an additional protocol: amendment of the CFR would require unanimous approval of all Member States and is thus a politically complicated and time-consuming procedure. The EU can act through enumerated legal instruments: Directives, Decisions, Regulations, and Recommendations and Opinions (Article 288 TFEU). Directives and Decisions are not desirable because of the relative freedom they provide for implementation by Member States potentially leading to even more fragmentation. The form of the Regulation seems to be the best option in the long term—given the need for a regulatory framework that directly addresses the rights of patients and reduces fragmentation and inconsistency in the digital single market of healthcare.

However, in light of the current political climate in Europe, for now, this proposal could be adopted in the form of a Recommendation, for example by the Council of the European Union. The Council Recommendation does not constitute a binding act, but it sets certain standards that EU Member States are expected to observe. It thus counts on the moral authority of the EU. In this way, this proposal takes the soft law approach, by first listing the rights of patients in a non-binding instrument. Over time, this soft law instrument can pave the way for formal recognition and the drafting of binding legislation, such as a Regulation (von der Decken & Koch, 2020). In the meantime, stakeholders can use the Charter to guide their national policies. Because of the embedding of the proposed patients’ rights in current human rights law, national courts and the CJEU can use the derivation approach and treat the Charter as an interpretative tool. A shortcoming of the Council Recommendation is the lack of democratic underpinning by the European Parliament. Another option could thus be to adopt a Declaration,Footnote45 an instrument that is becoming more popular in the ‘digital sphere’. However, this instrument has no binding power, does not introduce new obligations or entitlements, and should merely be seen as a statement of intention for future EU strategies. At the same time, the fact that Declarations are signed by (often multiple) key institutions of the EU contributes to their value (Cocito & De Hert, 2023).

5.4 Proposed Catalogue of European Patients’ Rights

See Table 1.

Table 1 Catalogue of patients’ rights

5.5 Considerations for Implementation

While codification has clear advantages for the protection of patients’ rights, it is also important to consider the steps following the adoption of a new Charter: the implementation phase. National implementation is essential to supporting new rights (Bob, 2011). For example, the neurorights movement has been criticised for paying too little attention to implementation. Some scholars have expressed concerns about the practical implications, suggesting the need for precise laws over new rights (Ligthart et al., 2023). Others found that enforcing existing rights is more important than creating new ones (Moreu Carbonell, 2021), or even warned of the devaluation of existing rights (Bublitz, 2022). Some argued that neurorights are legally unnecessary because existing frameworks already protect essential rights and freedoms such as the freedom of thought, consent, equality, and privacy (Borbón & Borbón, 2021). Similar critiques could be applied to (digital) patients’ rights.

Indeed, mere formal recognition of such rights is only part of the solution. The codification in a legal instrument is not enough to effectively guarantee the protection of patients and thus cannot stand on its own. In parallel to the adoption of a Charter, it is therefore important to take concrete legal measures to further regulate the boundaries of AI technologies, as well as establish legal mechanisms for judicial protection of these rights, for example by adopting sector-specific rules for the marketing, development, and use of AI systems in healthcare in the context of the AI Act.

It is also worth noting that, naturally, this Charter would not exist in a legal vacuum, but instead enter a highly regulated scene, with many existing product regulations at the level of the EU, but also national laws and policies on medical professional conduct. Nevertheless, a Charter would provide welcome guidance on how to involve patients in a quickly changing medical landscape. In addition to this, it could be helpful to develop guidelines and tools for healthcare professionals on how to protect patients’ rights when deploying AI technology in healthcare, such as in the choice of a specific AI system, for example in the form of a practical patients’ rights assessment instrument (van Kolfschooten, 2024a).

6 Concluding Remarks

The integration of AI in healthcare, while promising, introduces significant risks related to the health and rights of patients. The advancement of AI technologies in healthcare necessitates a balanced approach to integrating these innovations while safeguarding patients’ rights. The proposed EU Charter for Digital Patients’ Rights addresses the pressing need for a unified framework to protect patients in the age of AI. This Charter codifies existing rights and advocates for essential new rights. By harmonizing these rights across EU Member States, the Charter aims to mitigate health inequities and ensure that technological advancements enhance rather than undermine patient care. The implementation of such a Charter, coupled with effective regulatory mechanisms and guidelines for healthcare professionals, is crucial for maintaining trust in the evolving medical landscape. These efforts collectively aim to ensure that the integration of digital health innovation in healthcare enhances patient care without compromising human rights.

Data Availability

N/A.

Notes

- The annual private investment in AI technology in the healthcare sector increased by 108.15% between 2017 (€314.48 million) and 2022 (€654.73 million), see: ‘Annual private investment in artificial intelligence, by focus area’, NetBase Quid via AI Index Report (2023) via <http://ourworldindata.org> accessed 15 April 2024. NB: this does not include public investments.

- An example is the violation of the right to personal data protection.

- See for example the European Convention on Human Rights (ECHR). Also see Nys (2001).

- See for example: Directive 2011/24/EU of the European Parliament and of the Council of 9 March 2011 on the application of patients’ rights in cross-border healthcare.

- At the supranational level, there is the World Health Organization (WHO) Declaration on the Promotion of the Rights of the Patient in Europe (1994), and declarations by private actors such as the Active Citizenship Network European Charter of Patients’ Rights (2002) and the World Medical Association (WMA) Declaration of Lisbon on the Rights of the Patient (2005).

- NB: the only individual right—Article 85 Artificial Intelligence Act (EU AI Act)—is merely a procedural right and does not apply to AI medical devices. Also see van Kolfschooten (2023).

- NB: the Court of Justice of the EU (CJEU) uses a broad interpretation of “public”, which may mean that in EU Member States most of the health-related services in the public sector would be covered, see for example: CJEU C-168/18, Pensions-Sicherungs-Verein, para. 48.

- See on this concept: Postigo (2012).

- See on the medical liability (for physical harm) by AI-powered diagnostic systems in the Netherlands: van Kolfschooten & Wijne (2024).

- The AI Incident Database is a project of the Responsible AI Collaborative. Anyone can submit incidents, see: <http://incidentdatabase.ai/>.

- The WHO has marketed its chatbot worldwide, see <https://www.who.int/news/item/02-04-2024-who-unveils-a-digital-health-promoter-harnessing-generative-ai-for-public-health>.

- See for further elaboration on the ‘AI cycle of health inequity’: van Kolfschooten (2023). Also see on “cascading effects” of medical AI: Leslie et al. (2021).

- For example: Directive 2011/24/EU on the application of patients’ rights in cross-border healthcare.

- For example: the ECHR and the Convention for the Protection of Human Rights and Dignity of the Human Being with regard to the Application of Biology and Medicine: Convention on Human Rights and Biomedicine (ETS No. 164) 04/04/1997. NB: the EU also recognizes the importance of the Council of Europe in Article 6 TEU.

- World Health Organization. (2016). International Health Regulations (2005), 3rd ed. World Health Organization.

- This is confirmed by a mapping exercise of patients’ rights in 30 countries in Europe, conducted January to September 2015, see: Townend et al. (2018).

- Adopted by the 34th WMA, Lisbon, Portugal, September/October 1981 and amended by the 47th WMA General Assembly, Bali, Indonesia, September 1995 and editorially revised by the 171st WMA Council Session, Santiago, Chile, October 2005 and reaffirmed by the 200th WMA Council Session, Oslo, Norway, April 2015.

- Active Citizenship Network, ‘European Charter of Patients’ Rights’, Basis Document, Rome, November 2002.

- WHO, Regional Office for Europe, ‘The Rights of Patients. Report on a WHO Consultation, Amsterdam, 28–30 March 1994’, in: WHO Regional Office for Europe, in collaboration with the Health Law Section, University of Amsterdam, Promotion of the Rights of Patients in Europe, London: Kluwer Law International 1995, 13–19.

- E.g. the right to public health, medical care, social security and social services in Article 5(d) of the International Convention on the Elimination of all forms of Racial Discrimination (1965).

- E.g. the right to non-discrimination with regard to nationality when seeking healthcare in another Member State in Article 4(3) of Directive 2011/24/EU of the European Parliament and of the Council of 9 March 2011 on the application of patients’ rights in cross-border healthcare.

- First adopted in Tyrer v. the United Kingdom App no 5856/72 (ECtHR, 25 April 1978) para 31.

- For example, the human right to a clean, healthy, and sustainable environment by the UN. See: Bob (2011).

- Codified by the United Nations (UN) in: Convention on the Elimination of All Forms of Discrimination against Women (adopted 18 December 1979, entered into force 3 September 1981) 1249 UNTS 1.

- Codified by the UN in: Convention on the Rights of the Child (adopted 20 November 1989, entered into force 2 September 1990) 1577 UNTS 3.

- Such as AI rights; neurorights; internet rights.

- In accordance with the criteria for new international human rights as argued in: (Alston, 1984).

- For an extensive explanation of the difference between individual and collective rights, see: Chalabi (2023). See on privacy as a collective right: Puri (2023).

- This is protected in Article 4 of the AI Act. However, this provision addresses “providers and deployers” of AI systems, so only applies to the State when deploying AI.

- See Asiye Genç v. Turkey App no 24109/07 (European Court of Human Rights (ECtHR), 27 January 2015) para 82.

- See Nitecki v. Poland App no 65653/01 (ECtHR, 21 March 2002) para 4.

- Also see Report of the Special Rapporteur on the right of everyone to the enjoyment of the highest attainable standard of physical and mental health, A/HRC/29/33, UN Human Rights Council, 2 April 2015, paras 19–22.

- In the EU AI Act.

- For example, through the General Data Protection Regulation (GDPR), the European Health Data Space, and the ePrivacy regulation.

- In the Medical Devices Regulations and legislation on clinical trials and pharmaceutical products.

- E.g. the UN Convention on the Rights of the Child (CRC) and the UN Convention on the Rights of Persons with Disabilities (CRPD). The EU ratified the CRPD in 2011.

- See Article 114 Treaty on the Functioning of the EU (TFEU) and see, for example, Court of Justice of the European Union (CJEU), L.E.G.O. (C-242/17) ECLI:EU:C:2018:804, 4 October 2018, para 52.

- See Article 1(2)(a) EU AI Act.

- NB: Member States can only adopt legislation and policy posing barriers to the internal market to protect human health if this is justified, see Articles 49 and 56 TFEU. Also see: Veale & Borgesius, 2021.

- Article 168(7) TFEU.

- Also see the Medical Devices Regulation.

- For example on topics such as pharmaceutical products; medical devices; clinical trials; serious cross-border threats to health; patients’ rights in cross-border healthcare; AI systems; personal data; health data.

- Definition of healthcare used in Directive 2011/24/EU of the European Parliament and of the Council of 9 March 2011 on the application of patients’ rights in cross-border healthcare (Article 3(a)).

- See Article 51(1) Charter of Fundamental Rights of the European Union (CFR).

- NB: the EU institutions can issue a Declaration to convey their point of view. This is however not an official legislative instrument.

References

- Abbing, H. D. C. R. (2010). Patients’ Rights in a Technology and Market Driven-Europe. European Journal of Health Law, 17(1), 11–22. https://doi.org/10.1163/157180909X12604572349566

- Agarwal, R., Bjarnadottir, M., Rhue, L., Dugas, M., Crowley, K., Clark, J., & Gao, G. (2022). Addressing algorithmic bias and the perpetuation of health inequities: An AI bias aware framework. Health Policy and Technology, 12, 100702. https://doi.org/10.1016/j.hlpt.2022.100702

- Ahmed, M. I., Spooner, B., Isherwood, J., Lane, M., Orrock, E., & Dennison, A. (n.d.). A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus, 15(10), e46454. https://doi.org/10.7759/cureus.46454

- Alemanno, A. (2020). Towards a European Health Union: Time to level up. European Journal of Risk Regulation, 11(4), 721–725. https://doi.org/10.1017/err.2020.106

- Ali, R., Connolly, I. D., Tang, O. Y., Mirza, F. N., Johnston, B., Abdulrazeq, H. F., Lim, R. K., Galamaga, P. F., Libby, T. J., Sodha, N. R., Groff, M. W., Gokaslan, Z. L., Telfeian, A. E., Shin, J. H., Asaad, W. F., Zou, J., & Doberstein, C. E. (2024). Bridging the literacy gap for surgical consents: An AI-human expert collaborative approach. Npj Digital Medicine, 7(1), 1–6. https://doi.org/10.1038/s41746-024-01039-2

- Alston, P. (1984). Conjuring up new human rights: A proposal for quality control. The American Journal of International Law, 78(3), 607–621. https://doi.org/10.2307/2202599

- Andorno, R., & Lavazza, A. (2023). How to deal with mind-reading technologies. Frontiers in Psychology, 14. https://doi.org/10.3389/fpsyg.2023.1290478

- Bærøe, K., Miyata-Sturm, A., & Henden, E. (2020). How to achieve trustworthy artificial intelligence for health. Bulletin of the World Health Organization, 98(4), 257–262. https://doi.org/10.2471/BLT.19.237289

- Barbek, R., Toussaint, A., Löwe, B., & von dem Knesebeck, O. (2024). Intersectional inequalities in somatic symptom severity in the adult population in Germany found within the SOMA.SOC study. Scientific Reports, 14(1), 3820. https://doi.org/10.1038/s41598-024-54042-8

- Bartlett, O. (2024). Can EU Law Be Used to Challenge Better Regulation Practices That Do Not Lead to Better Health? European Journal of Health Law, 31, 389–410. https://doi.org/10.1163/15718093-bja10129

- Bertolaso, M., & Marcos, A. (2022). Technological changes and rights evolution in the bio-digital era: A philosophical overview. In E. Stefanini, L. Liguori, M. Ienca, O. Pollicino, & R. Andorno (Eds.), The Cambridge handbook of information technology, life sciences and human rights (pp. 261–272). Cambridge University Press. https://doi.org/10.1017/9781108775038.022

- Beyrer, C., Kamarulzaman, A., Isbell, M., Amon, J., Baral, S., Bassett, M. T., Cepeda, J., Deacon, H., Dean, L., Fan, L., Giacaman, R., Gomes, C., Gruskin, S., Goyal, R., Mon, S. H. H., Jabbour, S., Kazatchkine, M., Kasoka, K., Lyons, C., … Rubenstein, L. (2024). Under threat: The International AIDS Society–Lancet Commission on Health and Human Rights. The Lancet. https://doi.org/10.1016/S0140-6736(24)00302-7

- Blease, C., Torous, J., McMillan, B., Hägglund, M., & Mandl, K. D. (2024). Generative language models and open notes: Exploring the promise and limitations. JMIR Medical Education, 10, e51183. https://doi.org/10.2196/51183

- Blomberg, S. N., Folke, F., Ersbøll, A. K., Christensen, H. C., Torp-Pedersen, C., Sayre, M. R., Counts, C. R., & Lippert, F. K. (2019). Machine learning as a supportive tool to recognize cardiac arrest in emergency calls. Resuscitation, 138, 322–329. https://doi.org/10.1016/j.resuscitation.2019.01.015

- Bob, C. (2011). Fighting for new rights. In C. Bob (Ed.), The international struggle for new human rights (pp. 1–13). University of Pennsylvania Press. https://doi.org/10.9783/9780812201345.1

- Borbón, D., & Borbón, L. (2021). A critical perspective on neurorights: Comments regarding ethics and law. Frontiers in Human Neuroscience, 15, 703121. https://doi.org/10.3389/fnhum.2021.703121

- Broken links and bad advice: Conversations with SARAH, WHO’s virtual health aide. (2024, April 23). POLITICO. https://www.politico.eu/article/bad-advice-conversations-with-sarah-world-health-organization-virtual-health-aide/

- Brown, C., Ruck Keene, A., Hooper, C. R., & O’Brien, A. (2020). Isolation of patients in psychiatric hospitals in the context of the COVID-19 pandemic: An ethical, legal, and practical challenge. International Journal of Law and Psychiatry, 71, 101572. https://doi.org/10.1016/j.ijlp.2020.101572

- Bublitz, J. C. (2022). Novel neurorights: From nonsense to substance. Neuroethics, 15(1), Article 1. https://doi.org/10.1007/s12152-022-09481-3

- Calcoen, P., Boer, A., & van de Ven, W. P. M. M. (n.d.). Should new health technology be available only for patients able and willing to pay? Journal of Market Access and Health Policy, 1(5), 1315294. https://doi.org/10.1080/20016689.2017.1315294

- Cathaoir, K. Ó. (2022). Digital healthcare technologies and human rights. In Health and human rights: Global and European perspectives (2nd ed.). Intersentia.

- Celeste, C., Ming, D., Broce, J., Ojo, D. P., Drobina, E., Louis-Jacques, A. F., Gilbert, J. E., Fang, R., & Parker, I. K. (2023). Ethnic disparity in diagnosing asymptomatic bacterial vaginosis using machine learning. Npj Digital Medicine, 6(1), 1–10. https://doi.org/10.1038/s41746-023-00953-1

- Chalabi, A. (2023). A new theoretical model of the right to environment and its practical advantages. Human Rights Law Review, 23(4), ngad023. https://doi.org/10.1093/hrlr/ngad023

- Cocito, C., & De Hert, P. (2023). The transformative nature of the EU Declaration on Digital Rights and Principles: Replacing the old paradigm (normative equivalency of rights). Computer Law & Security Review, 50, 105846. https://doi.org/10.1016/j.clsr.2023.105846

- Cohen, J., & Ezer, T. (2013). Human rights in patient care: A theoretical and practical framework. Health and Human Rights Journal. https://www.hhrjournal.org/2013/12/human-rights-in-patient-care-a-theoretical-and-practical-framework/

- Crenshaw, K. (1989). Demarginalizing the intersection of race and sex: A Black feminist critique of antidiscrimination doctrine, feminist theory and antiracist politics. The University of Chicago Legal Forum, 1989, 139. https://scholarship.law.columbia.edu/faculty_scholarship/3007

- Custers, B. (2022). New digital rights: Imagining additional fundamental rights for the digital era. Computer Law & Security Review, 44, 105636. https://doi.org/10.1016/j.clsr.2021.105636

- Daigle, B., & Khan, M. (2020). The EU General Data Protection Regulation: An Analysis of Enforcement Trends by EU Data Protection Authorities. Journal of International Commerce & Economics, 2020, 1. https://heinonline.org/HOL/Page?handle=hein.journals/jice2020%26id=1%26div=%26collection=

- Davies, B. (2020). The right not to know and the obligation to know. Journal of Medical Ethics, 46(5), 300–303. https://doi.org/10.1136/medethics-2019-106009

- de Ruijter, A. (2016). European integration in the field of human health. Journal of European Integration, 38(7), 837–843. https://doi.org/10.1080/07036337.2016.1228333

- de Witte, B. (2006). Non-market values in internal market legislation. In N. N. Shuibhne (Ed.), Regulating the internal market (pp. 61–86). Edward Elgar.

- Delhomme, V. (2017). Internal market as an excuse: The case of EU anti-tobacco legislation. College of Europe.

- Delvaux, M. (n.d.). REPORT with recommendations to the Commission on Civil Law Rules on Robotics | A8-0005/2017 | European Parliament. Retrieved July 3, 2024, from https://www.europarl.europa.eu/doceo/document/A-8-2017-0005_EN.html

- Duffourc, M. N., & Gerke, S. (2023). The proposed EU Directives for AI liability leave worrying gaps likely to impact medical AI. Npj Digital Medicine, 6(1), Article 1. https://doi.org/10.1038/s41746-023-00823-w

- Durán, J. M., & Jongsma, K. R. (2021). Who is afraid of black box algorithms? On the epistemological and ethical basis of trust in medical AI. Journal of Medical Ethics, 47(5), 329–335. https://doi.org/10.1136/medethics-2020-106820

- Evans, H., & Snead, D. (2024). Why do errors arise in artificial intelligence diagnostic tools in histopathology and how can we minimize them? Histopathology, 84(2).

- Finco, M. G., Mir, N., Gresham, G., & Huisingh-Scheetz, M. (2024). Ethical considerations of digital health technology in older adult care. The Lancet Healthy Longevity, 5(1), e12–e13. https://doi.org/10.1016/S2666-7568(23)00236-2

- Ghazarian, A., Zheng, J., El-Askary, H., Chu, H., Fu, G., & Rakovski, C. (2021). Increased risks of re-identification for patients posed by Deep learning-based ECG identification algorithms. In 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), 1969–1975. https://doi.org/10.1109/EMBC46164.2021.9630880